A SOC is defined as: “The combination of people, processes and technologies that protect the information systems of an organization through effective design and implementation, continuous monitoring of system status, detection of unintentional or unpleasant situations, and minimizing damage from unwanted consequences.” Many other terms, however, are used interchangeably when people describe a security operations centre. We asked which SOC activities are performed in-house, outsourced, or both. The ability to detect and respond to incidents is an integral part of a SOC and is usually an in-house capability. Security architecture, planning and administration are also common functions, as is ensuring that the organization’s IT systems comply with legal and industry requirements. Technical security assessments (such as penetration and vulnerability testing), the collection and application of threat intelligence, and purple teaming are less common, but still present.

The Security Operations Centre (SOC) is made up of five distinct modules: event generators, event collectors, message databases, analysis engines and reaction management software. A major problem encountered when building a SOC is the integration of all these modules, which are usually built as separate components, while preserving the availability, integrity and confidentiality of the data and of its transmission channels. In this paper we discuss the practical architecture needed to integrate those modules. We introduce the concepts behind each module, briefly describe the common problems encountered with each of them, and finally outline the global architecture of the SOC.

SOC Modules

Event Generators

We can distinguish two main families of event generators: event-based data generators (i.e. sensors), which generate events in response to a particular operation on the OS, applications or network, and status-based data generators (i.e. pollers), which generate an event in reaction to an external stimulus such as a ping, a data integrity check or a daemon status check.

Sensors

The best-known type of sensor is the IDS, either host-based or network-based. We can also add to this category almost any filtering system (network, application or user-based) that provides logging, e.g. firewalls, routers with ACLs, switches and wireless hubs using MAC address restrictions, RADIUS servers, SNMP stacks, etc. In addition, honeypots and network sniffers should also be considered sensors. In the latter case, each sniffed packet can produce an event!

Each sensor should be considered an autonomous agent operating in a hostile environment and should share the same characteristics: run continuously, be fault tolerant, resist subversion, impose minimal overhead, be configurable and adaptable, be scalable, degrade gracefully, and allow dynamic reconfiguration.

Pollers

Pollers are a specific type of event generator. Their job is to produce an event when a particular state is detected on a third-party system. A simple analogy can be made with Network Management Systems. In that case, the polling station checks the system status (via ping or SNMP, for example). If the system appears to be down, the polling station sends an alert to the Management Station.

In a security-specific context, pollers are mainly responsible for checking service status (to detect DoS) and data integrity (usually web page content).
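The two poller checks described above can be sketched as follows. This is a minimal illustration, not a reference implementation: the `PollEvent` structure and the function names are hypothetical, service status is approximated by a plain TCP connection attempt, and integrity is checked by comparing a SHA-256 digest against a previously recorded baseline.

```python
import hashlib
import socket
from dataclasses import dataclass
from typing import Optional


@dataclass
class PollEvent:
    """Event a poller emits when a check fails (hypothetical structure)."""
    target: str
    check: str   # "service" or "integrity"
    detail: str


def check_service(host: str, port: int, timeout: float = 2.0) -> Optional[PollEvent]:
    """Return an event if a TCP connection to host:port cannot be opened."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return None  # the service answered, nothing to report
    except OSError as exc:
        return PollEvent(f"{host}:{port}", "service", f"unreachable: {exc}")


def check_integrity(target: str, content: bytes, expected_sha256: str) -> Optional[PollEvent]:
    """Return an event if the content digest differs from the recorded baseline."""
    actual = hashlib.sha256(content).hexdigest()
    if actual == expected_sha256:
        return None
    return PollEvent(target, "integrity", f"digest changed to {actual}")
```

A poller would call these functions on a schedule and forward any returned `PollEvent` to a collector. Both functions return `None` when nothing is wrong, so a healthy system produces no events.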

Collectors

The purpose of a collector is to gather information from different sensors and translate it into a standard format, so that there is a common message base. Once again, the availability and scalability of these boxes is a major concern. However, such aspects can be handled in the same way as for any other server-side service, using clusters, high availability, load balancing, dedicated hardware or appliances, etc. The standard formatting of collected data (the second point described above) is far more theoretical and is still a matter of debate in the security community. The IETF is working on standards for message formatting and transmission protocols. However, unofficial extensions already appear to be necessary for correlation and for the management of distributed sensors.
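As an illustration of the translation step, the sketch below maps two sensor outputs onto one common record. Everything in it is an assumption made for the example: the `NormalizedEvent` fields, the firewall log line layout and the IDS record keys are hypothetical and do not correspond to any IETF format.

```python
import re
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class NormalizedEvent:
    """Common message format shared by all sensors (hypothetical fields)."""
    timestamp: datetime
    sensor: str
    source_ip: str
    dest_ip: str
    action: str


# Assumed firewall line layout: "2024-05-01T12:00:00Z DENY 10.0.0.5 -> 192.168.1.10"
_FW_LINE = re.compile(r"^(\S+) (\w+) (\S+) -> (\S+)$")


def from_firewall(line: str) -> NormalizedEvent:
    """Translate one firewall log line into the common format."""
    match = _FW_LINE.match(line.strip())
    if match is None:
        raise ValueError(f"unrecognised firewall line: {line!r}")
    ts, action, src, dst = match.groups()
    when = datetime.strptime(ts, "%Y-%m-%dT%H:%M:%SZ").replace(tzinfo=timezone.utc)
    return NormalizedEvent(when, "firewall", src, dst, action.lower())


def from_ids(record: dict) -> NormalizedEvent:
    """Translate one IDS record (assumed keys: ts, src, dst, alert)."""
    when = datetime.fromtimestamp(record["ts"], tz=timezone.utc)
    return NormalizedEvent(when, "ids", record["src"], record["dst"], record["alert"].lower())
```

Normalizing timestamps to UTC and lower-casing the action at this stage means later modules can compare events from different sensors without knowing where they came from.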

Message Databases

Databases are the most standard modules we find in a SOC architecture. They are simply databases. The only SOC-specific operation performed at this level is a basic level of correlation to identify and merge duplicates coming from the same or from different sources. Besides the classic concerns about database availability, integrity and confidentiality, message databases are particularly sensitive to performance issues, as sensors may generate a large number of messages per second. Such messages need to be stored, processed and analysed as quickly as possible, in order to allow a timely reaction to intrusion attempts or successes.
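A minimal sketch of duplicate merging at the database level, assuming an in-memory SQLite store and a hypothetical rule that two messages are duplicates when they share source, destination and action within the same 60-second bucket. The table layout, the fingerprint rule and the function names are illustrative choices, not a prescribed design.

```python
import hashlib
import sqlite3


def open_store() -> sqlite3.Connection:
    """Create an in-memory message database (hypothetical schema)."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE messages ("
        " fingerprint TEXT PRIMARY KEY,"
        " source_ip TEXT, dest_ip TEXT, action TEXT,"
        " first_seen INTEGER, last_seen INTEGER,"
        " sensors TEXT, count INTEGER)"
    )
    return conn


def fingerprint(source_ip: str, dest_ip: str, action: str, ts: int,
                bucket_seconds: int = 60) -> str:
    """Messages with the same endpoints and action in one time bucket collide."""
    raw = f"{source_ip}|{dest_ip}|{action}|{ts // bucket_seconds}"
    return hashlib.sha256(raw.encode()).hexdigest()


def store(conn: sqlite3.Connection, sensor: str, source_ip: str,
          dest_ip: str, action: str, ts: int) -> str:
    """Insert a message, or merge it into an existing duplicate."""
    fp = fingerprint(source_ip, dest_ip, action, ts)
    row = conn.execute(
        "SELECT sensors FROM messages WHERE fingerprint = ?", (fp,)
    ).fetchone()
    if row is None:
        conn.execute(
            "INSERT INTO messages VALUES (?, ?, ?, ?, ?, ?, ?, 1)",
            (fp, source_ip, dest_ip, action, ts, ts, sensor),
        )
    else:
        sensors = ",".join(sorted(set(row[0].split(",")) | {sensor}))
        conn.execute(
            "UPDATE messages SET count = count + 1,"
            " last_seen = MAX(last_seen, ?), sensors = ? WHERE fingerprint = ?",
            (ts, sensors, fp),
        )
    conn.commit()
    return fp
```

Fixed time buckets keep the lookup to a single primary-key query, which matters at high message rates, but two duplicates that straddle a bucket boundary will not be merged. A production store would need a more careful rule.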

Analysis Engines

Analysis engines are responsible for analysing the events stored in the message databases. They must perform a variety of operations in order to deliver qualified alert messages. This is probably the area on which most current research focuses, whether on correlation algorithms, false-positive detection, mathematical representation or distributed operation. However, the diversity of that research and the early stage of implementations (mostly limited to proofs of concept) make this module the most proprietary and non-standard part of the SOC. It is clear that the analysis process requires input from a knowledge base that stores intrusion characteristics, a model of the protected system and the security policy.
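To make the idea of correlation concrete, here is a deliberately simple threshold rule: raise one alert when a source produces a given number of "deny" events within a sliding time window. This is a stand-in chosen for illustration, not one of the research algorithms mentioned above, and it ignores the system model and security policy inputs. The `Alert` structure, the event tuple layout and the default thresholds are all assumptions.

```python
from collections import defaultdict, deque
from dataclasses import dataclass
from typing import Deque, Dict, Iterable, List, Tuple


@dataclass(frozen=True)
class Alert:
    """Qualified alert produced by the rule (hypothetical structure)."""
    source_ip: str
    count: int
    window_start: int
    window_end: int


def correlate(events: Iterable[Tuple[int, str, str]],
              threshold: int = 3, window_seconds: int = 60) -> List[Alert]:
    """events are (timestamp, source_ip, action) tuples sorted by timestamp."""
    recent: Dict[str, Deque[int]] = defaultdict(deque)
    alerts: List[Alert] = []
    for ts, source_ip, action in events:
        if action != "deny":
            continue
        window = recent[source_ip]
        window.append(ts)
        # Drop timestamps that have fallen out of the sliding window.
        while window and ts - window[0] > window_seconds:
            window.popleft()
        if len(window) >= threshold:
            alerts.append(Alert(source_ip, len(window), window[0], ts))
            window.clear()  # start fresh so one burst yields one alert
    return alerts
```

Clearing the window after an alert trades sensitivity for noise reduction: a sustained burst yields one alert per `threshold` events rather than one per event.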

Reaction Management

Reaction management system is a generic term used to describe the set of reaction and reporting tools used to respond to offensive events taking place on or within the supervised systems. Experience shows that this is a very subjective concept, as it involves GUI ergonomics, security policy enforcement, legal constraints, and the contractual SLAs that the supervising team owes the client. These subjective constraints make it almost impossible to define anything beyond advice and best practice based on real-life experience over time. However, the importance of reaction management systems should not be underestimated: an intrusion attempt may be well analysed and qualified, but the whole operation will be in vain if no appropriate reaction can be launched within the appropriate delays. The only possible response at that point would be the infamous “post-mortem analysis”.

SOC Architectures

A SOC may be a single-room operation, or it may be distributed worldwide in a follow-the-sun model. The most common architecture, so far, is a single central SOC to which all data is sent. This centralization is problematic because of data protection laws and regional requirements, as well as the need to understand the systems in use locally. Interestingly, a small percentage of these respondents plan to move away from this architecture in the next year.

Many organizations are moving their SOC infrastructure to cloud services. This approach draws on the advantages often associated with cloud-based IT services: fault tolerance and the perception of lower operating costs. Cloud service providers do suffer outages, and while most fall within the scope of published SLAs, SOCs may be subject to regulations (GDPR in Europe, among others), as well as critical requirements that demand higher uptime for specific tools and processes.

The following is a basic architecture:

Technology in Use

When planning to build a SOC, most organizations think about technology rather than about the processes and people involved. This is usually because the technical side of the SOC is the easiest to measure. In addition, technology is a necessity, so it has to be purchased and deployed.

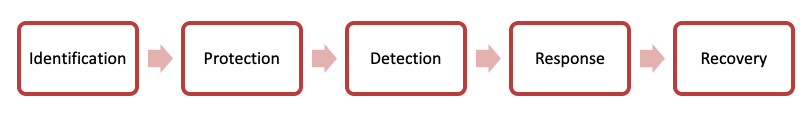

We used the functions of the NIST Cybersecurity Framework to group technologies into identify, protect, detect, respond and recover roles, recognizing that many tools serve multiple functions. While these groupings are useful for showing managers which capabilities are required, there is a lot of overlap between categories. All in all, people are satisfied with their tools. If we look at the actual number of tools that we placed in each category based on their core function, vendors primarily sell tools in the “protect” and “detect” categories. If you are a vendor, take note: there is plenty of room to help organizations with identification, response and recovery.

Metrics in Use

Many people rely on a SIEM to correlate event data with other security-related data. SIEM-based correlation of event data is one source of SOC metrics, but respondents reported lower levels of satisfaction with the technology. A SIEM is a technical tool from which a lot of metrics can be extracted, so it is worth looking at how this event data correlation drives our evaluation of SOC performance. We can easily count logged events, and this is where most people stop with their metrics.

Going strictly by the numbers, not much has changed for SOC managers over the years. However, just holding position against these powerful currents is impressive, given the rapid movement of key business applications to cloud-based services, the growing business use of “smart” technologies that drives higher levels of heterogeneous technology, and overall global technological complexity.

Kanoo Elite, with its years of experience in providing security and enhancing security frameworks, is your one-stop shop for achieving SOC compliance. We use security automation to be both efficient and effective. By combining highly qualified security analysts with security automation, we increase analytical power to improve your security and better protect you against data breaches and cyberattacks.