Organizations are using real-time analytics in more of their processes because of the pressure to increase the speed, accuracy, and effectiveness of their decisions — particularly for digital business acceleration, continuous intelligence, and the Internet of Things (IoT). Almost any analytics, BI or AI product can be used for near-real-time analytics as long as it uses current input data. Decision makers often have limited situation awareness and lack a common fact base across their organizations because traditional business applications do not provide enough real-time data from sources outside of their local business units. Too much information can be as damaging as too little information to busy people charged with making real-time or near-real-time decisions.

This whitepaper guides data and analytics leaders in adopting best practices to:

- Maximize the quality and effectiveness of their real-time analytics strategies

- Improve the precision and effectiveness of real-time decisions by reengineering decisions to use the appropriate combination of reporting, alerting, machine learning (ML), event stream processing, optimization and other AI tools operating on real-time data

- Improve organization-wide situation awareness by sharing all relevant real-time data across organizational, geographic and system boundaries throughout the organization and its ecosystem

- Reduce the risk of flaws in real-time analytics solutions

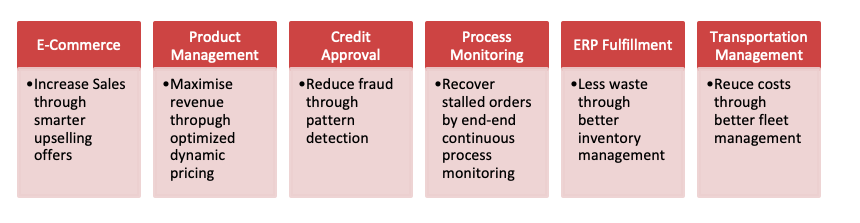

Examples of Real-time Analytics

As systems use more diverse kinds of data and make more sophisticated use of business intelligence and data science tools, the need to involve professional data and analytics teams will keep growing. This guide highlights the top practices that data and analytics professionals should employ when designing and implementing real-time analytics solutions.

Analysis

Determine the “Right Time” for Analytics

Organizations use real-time analytics to respond quickly to events as they occur or as soon as they can be predicted. The system may be real-time in a strict engineering sense, or it may be only “business real-time,” which is also called “near-real-time.” Calculations are performed quickly, and at least some of the input data is fresh. Real-time systems generally also use some older data in the analysis to provide context and complement and enrich new data.

Real time is not always appropriate. Depending on many considerations, the “right time” for analytics in a business situation may be anywhere from subsecond latency (real time) to a year or more. In each situation, data and analytics professionals and business analysts should work with business managers and other decision makers to determine whether real-time analytics would be beneficial.

The two primary considerations are:

- How quickly will the value of the decision degrade? Analytics should be real-time if:

  - A customer is waiting on the phone for an answer

  - Resources would be wasted if they sit idle

  - Fraud would succeed

  - Physical processes would fail if the decision takes more than a few milliseconds or minutes

- Will a decision be better if more time and effort are spent on the analysis? Simple, well-understood decisions on known topics for which data is readily available can be made quickly without sacrificing accuracy or effectiveness. However, achieving good quality in a complicated decision may require a long decision cycle.

Reengineer Decisions Using the Appropriate Combination of BI, Analytics and AI Tools

Almost any analytics, BI or AI product can be used for (near) real-time analytics, as long as it uses current input data. People often think of real-time analytics as stream analytics on data “in motion,” but only some of it is. Event stream processing (ESP) platforms commonly implement stream analytics for situation awareness (descriptive analytics). An increasing number of ESP applications embed predictive ML inferencing as part of their algorithms.
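As a rough illustration of stream analytics on data “in motion,” the sketch below keeps a running average over a sliding time window, which is one common building block of descriptive analytics on an event stream. It is not taken from any particular ESP platform; the `Event` and `SlidingWindowAverage` names, the 60-second window and the sample values are all assumptions made for the example.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Event:
    timestamp: float  # seconds since an arbitrary start (assumed unit)
    value: float      # e.g. a sensor reading or order amount (hypothetical)


class SlidingWindowAverage:
    """Average of the events seen within the last `window_seconds`."""

    def __init__(self, window_seconds: float) -> None:
        self.window_seconds = window_seconds
        self._events = deque()  # events currently inside the window
        self._total = 0.0       # running sum of their values

    def update(self, event: Event) -> float:
        """Add one event, evict expired ones, return the current average."""
        self._events.append(event)
        self._total += event.value
        cutoff = event.timestamp - self.window_seconds
        # Events are assumed to arrive in timestamp order.
        while self._events[0].timestamp <= cutoff:
            self._total -= self._events.popleft().value
        return self._total / len(self._events)


window = SlidingWindowAverage(window_seconds=60.0)
for event in (Event(0.0, 10.0), Event(30.0, 20.0), Event(70.0, 60.0)):
    print(event.timestamp, window.update(event))
```

The result is refreshed on every arriving event rather than on a batch schedule, which is what distinguishes this style of processing from reporting on data at rest.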

Analytics and BI (ABI) platforms, such as Looker for Google Cloud Platform, Microsoft Power BI, Qlik and Tableau, typically work with non-real-time data that is hours, days or weeks old. However, these tools are sometimes used for near-real-time dashboards or embedded near-real-time displays. Excel has extensive ML and optimization add-ons that enable predictive and prescriptive analytics where relevant.

The process for designing a new ML model is inherently not real-time. A data scientist follows a lengthy, multistep experimental process such as the Cross-Industry Standard Process for Data Mining (CRISP-DM 2), or a modern variation of CRISP-DM that uses augmented analytics. Model development and training take time. In a few emerging applications, a model may also learn or be incrementally retrained in real time; this is known as adaptive machine learning.
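To make the idea of incremental retraining concrete, here is a minimal sketch of a model that updates itself one observation at a time instead of being retrained offline. It assumes a deliberately tiny one-feature linear model and a fixed learning rate; `OnlineLinearModel`, `learn_one` and the simulated stream are hypothetical names and data, not the API of any real library.

```python
class OnlineLinearModel:
    """One-feature linear model y ~ weight * x, updated per observation."""

    def __init__(self, learning_rate: float = 0.1) -> None:
        self.learning_rate = learning_rate
        self.weight = 0.0

    def predict(self, x: float) -> float:
        return self.weight * x

    def learn_one(self, x: float, y: float) -> None:
        # Single stochastic-gradient step on the squared error
        # of this one observation.
        error = y - self.predict(x)
        self.weight += self.learning_rate * error * x


def simulate_stream(model: OnlineLinearModel, cycles: int = 20) -> None:
    """Feed the model a made-up stream in which y is always 2 * x."""
    for _ in range(cycles):
        for x in (0.5, 1.0, 1.5, 2.0):
            model.learn_one(x, 2.0 * x)


model = OnlineLinearModel()
simulate_stream(model)
print(round(model.weight, 3))  # approaches 2.0 as observations arrive
```

The model is usable for predictions at every point in the stream, so learning and inferencing are interleaved rather than separated into a long development phase and a deployment phase.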

Share Relevant Real-Time Data Across Organizational, Geographic and System Boundaries

Systems that share real-time information and analytics across organizational and application system boundaries create a “common operating picture.” This term originated in the military, where the benefits of giving a shared, real-time view of what is happening to all authorized people who participate in a particular operation are obvious. In business, as in the military, a “common operating picture” does not necessarily mean showing identical displays on laptops or smartphones. Information from real-time analytics may need to be selectively withheld for security reasons. Moreover, each person may need to see a different subset of the data, a different level of detail, or a different graphical presentation tailored to the unique decisions they will make. Where possible, end users should be given the ability to tailor their own operating picture, rather than having to wait for an analytics professional or application developer to do the work. A growing number of rule engines and analytics tools are designed to make self-service practical.
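One simple way to picture a shared fact base with tailored, selectively withheld views is a per-role field filter over a single record, sketched below. The roles, field names and shipment record are invented for illustration; a real deployment would draw these from its own security and data model.

```python
# A single shared, real-time record (hypothetical fields and values).
SHIPMENT = {
    "shipment_id": "S-1",
    "status": "delayed",
    "eta_minutes": 95,
    "customer_name": "Acme",
    "contract_value": 120000,
}

# Which fields each role is allowed, and needs, to see (assumed policy).
ROLE_FIELDS = {
    "dispatcher": {"shipment_id", "status", "eta_minutes"},
    "account_manager": {"shipment_id", "status", "customer_name", "contract_value"},
}


def view_for(role: str, record: dict) -> dict:
    """Return the subset of the shared record that this role may see.

    Unknown roles get an empty view, so data is withheld by default.
    """
    allowed = ROLE_FIELDS.get(role, set())
    return {key: value for key, value in record.items() if key in allowed}


print(view_for("dispatcher", SHIPMENT))
print(view_for("account_manager", SHIPMENT))
```

Both roles work from the same underlying record, so they share one set of facts even though neither sees every field.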

Through better information sharing, decisions based on real-time analytics can result in more efficient and effective internal operations, better customer service, greater customer satisfaction and smarter cross-selling offers that stand a better chance of being accepted.

Design Systems That Present Only Essential Information at Critical Points in Time

More information is not always better because many people are overwhelmed by the volume of data that is presented to them. The problem of “infoglut” is particularly acute among people charged with making real-time operational decisions. Organizations are increasingly aware that a person’s attention is a scarce resource that should be conserved by careful system design. The ultimate strategy to conserve human attention is to automate the decision and response to the situation. Automated decisions are also faster, less expensive, more consistent and more transparent than human decisions.

When real-time analytics are used to support or augment human decisions, the system designer must determine what information is essential to the decision. This is best accomplished by developing a decision model. A decision model specifies the input data, decision algorithm and kinds of output results that may occur. It deliberately ignores data that is not essential to the decision so a minimum amount of data is shown to the person (decision models also apply to automated decisions).
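The sketch below shows one possible shape for a decision model in code: an explicit input type, a decision algorithm and a closed set of outcomes. The inventory reorder scenario, the field names and the rule itself are assumptions chosen only to keep the example small.

```python
from dataclasses import dataclass
from enum import Enum


class Outcome(Enum):
    """The only results this decision can produce."""
    REORDER = "reorder"
    HOLD = "hold"


@dataclass(frozen=True)
class DecisionInput:
    """The only data the decision is allowed to depend on."""
    units_on_hand: int
    forecast_daily_demand: float
    supplier_lead_time_days: int


def extract_input(raw: dict) -> DecisionInput:
    """Pick the essential fields out of a wider record, ignoring the rest."""
    return DecisionInput(
        units_on_hand=raw["units_on_hand"],
        forecast_daily_demand=raw["forecast_daily_demand"],
        supplier_lead_time_days=raw["supplier_lead_time_days"],
    )


def decide(inp: DecisionInput) -> Outcome:
    """Reorder when stock would run out before a new delivery could arrive."""
    demand_during_lead_time = inp.forecast_daily_demand * inp.supplier_lead_time_days
    if inp.units_on_hand < demand_during_lead_time:
        return Outcome.REORDER
    return Outcome.HOLD


raw_record = {
    "units_on_hand": 40,
    "forecast_daily_demand": 12.5,
    "supplier_lead_time_days": 4,
    "warehouse_manager": "not needed for this decision",
    "last_audit_date": "not needed for this decision",
}
print(decide(extract_input(raw_record)))
```

Because the inputs are declared up front, the same `DecisionInput` fields are a natural list of what to show a person when the decision is supported rather than automated.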

Many business operations are monitored by real-time dashboards that constantly refresh throughout the business day or even 24/7 (i.e., continuous intelligence). In other cases, real-time analytics are executed only on request when a person wants to make a decision or inquire into the status of an operation. Alert thresholds must be carefully designed to minimize false negatives (i.e., when the system fails to send an alert in a problem situation) and false positives (i.e., when the system sends an alert although there is no problem). False positives result in alert fatigue and people stop paying attention to the alerts.
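One common way to cut false positives is to alert only after a threshold has been breached several times in a row, as in the sketch below. The threshold of 90, the three-breach rule and the readings are assumed values for illustration; the right settings depend on how costly a missed or delayed alert is in each situation.

```python
class DebouncedAlert:
    """Raise an alert only after several consecutive threshold breaches."""

    def __init__(self, threshold: float, required_breaches: int) -> None:
        self.threshold = threshold
        self.required_breaches = required_breaches
        self._streak = 0  # consecutive readings above the threshold so far

    def observe(self, value: float) -> bool:
        """Return True exactly once per episode, when the streak is reached."""
        if value > self.threshold:
            self._streak += 1
        else:
            self._streak = 0
        return self._streak == self.required_breaches


alert = DebouncedAlert(threshold=90.0, required_breaches=3)
readings = [70, 95, 72, 96, 97, 98, 99, 60]  # made-up utilization readings
for reading in readings:
    if alert.observe(reading):
        print(f"ALERT: sustained breach, latest reading {reading}")
```

The isolated spike to 95 is ignored, while the sustained run above 90 triggers a single alert. The trade-off is explicit: a larger `required_breaches` suppresses more noise but delays the alert and can miss short-lived problems, which moves risk from false positives toward false negatives.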

Build Guardrails into Automated and Human Decisions

Because of the speed and, often, the scale at which real-time decision-making systems work, data and analytics leaders should ensure there is a mechanism to verify the reasonableness of analytically generated decisions before they are put into action. After years of obvious problems from misbehaving systems, it is surprising how many systems lack simple guardrails that could minimize errors.

The idea of system guardrails is to create secondary analytic components that check the output of the primary analytic systems or a person’s intended decision. The secondary system can be designed to monitor the quality of decisions via range checks, circuit breakers and other logic. These typically entail one or more sets of rules or scoring models (or a combination of these) that evaluate a decision before an action takes place. The secondary system’s alternative logic determines whether the primary system’s output makes sense.

The secondary (guardrail) component can review either human- or machine-made decisions. Human decisions are checked for human error, policy compliance, fraud, and deliberate sabotage. Machine decisions are checked for computational errors and adherence to common sense.
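A guardrail of this kind can be sketched as a small secondary component that applies a range check to each proposed decision and trips a circuit breaker after repeated rejections. The discount scenario, the 0 to 30 percent range and the limit of two consecutive rejections are assumptions for the example, not recommended values.

```python
from dataclasses import dataclass


@dataclass
class Guardrail:
    """Secondary check on a proposed decision, human- or machine-made."""
    min_value: float
    max_value: float
    max_consecutive_rejections: int
    _rejections: int = 0
    tripped: bool = False  # once True, nothing passes until a manual reset

    def check(self, proposed: float) -> bool:
        """Return True if the proposed decision may be put into action."""
        if self.tripped:
            return False  # circuit breaker is open
        if self.min_value <= proposed <= self.max_value:
            self._rejections = 0
            return True  # range check passed
        self._rejections += 1
        if self._rejections >= self.max_consecutive_rejections:
            self.tripped = True  # too many bad outputs in a row
        return False

    def reset(self) -> None:
        """Re-enable decisions after someone has investigated the cause."""
        self._rejections = 0
        self.tripped = False


guard = Guardrail(min_value=0.0, max_value=30.0, max_consecutive_rejections=2)
for discount_percent in (10.0, 85.0, -5.0, 10.0):  # hypothetical primary outputs
    print(discount_percent, guard.check(discount_percent))
```

Note that the final proposal of 10.0 is blocked even though it is in range: after two consecutive out-of-range outputs the breaker assumes the primary system is misbehaving and holds all actions until `reset` is called.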

Businesses are becoming increasingly digital, and real-time big data analytics must handle growing quantities and diversity of data. Drawing on years of experience equipping organizations with real-time data analytics across a range of technologies, Kanoo Elite helps meet these demands.

Our specialized services include appliances (hardware and software systems), special processor/memory chip combinations, and in-database analytics (analytics capabilities embedded in the database itself). Kanoo Elite’s real-time analytics applications and solutions are designed to leverage the full power of standard processors and memory. They help you analyse data of any structure directly within the database, giving you results in real time without expensive data warehouse loads.